IAM Best Practices

AWS Identity and Access Management (IAM) is an AWS-wide solution for authentication, authorization, administration and identity-store related processes. With IAM you can specify how and if users and services can access specific resources. IAM policies simplify the application of the principle of least privilege by allowing you to define granular access control across your entire AWS infrastructure. IAM underpins all AWS services and their ability to interact. By default, AWS entities start with no access rights whatsoever until granted permissions within IAM. Learning how to leverage IAM best practices is essential to basic security hygiene and an easy way to increase your cloud security.

Executive summary

There are many ways to leverage IAM to improve security, but the important thing to remember is that IAM should be considered during every phase of the development lifecycle. Below is a quick summary of the best practices that this article covers in detail.

| Best practice | Summary |
|---|---|
| Multi-Factor Authentication (MFA) | Ensure that MFA is enabled for all AWS console users |
| Minimize users | Minimize the number of AWS console users – use roles instead |
| Least Privilege | Only provide users and groups with the minimum required access level. Explicitly defining what resources users can access helps to eliminate security risks |
| Review access and access controls | Make sure password policies are strict. Enforce strong passwords that expire at reasonable intervals. Regularly perform IAM audits. Remove unused users, and groups |
| Embedding keys | Never store access keys or credentials in code. Have a process in place should this occur |

Multi-Factor Authentication

The importance of strong passwords is not exclusive to cloud security, but the cloud can provide attackers with a wider platform from which to launch further attacks should your first lines of defense fail. A single password is no longer sufficient. Multi-factor authentication provides an extra level of security by requiring additional information (a second factor) that only a legitimate user has access to. The second factor should not be something that the user knows (such as a password), as this can be guessed or brute-forced. Instead, it should be something that the user physically possesses, like a device that generates one-time passwords (OTP), or something that uniquely identifies them, like a scanned fingerprint. You can enable MFA for users through the AWS console.
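Enabling MFA on a user does not by itself stop that user from acting without it. The following is a minimal sketch, not taken from the original article, of an identity policy that denies most actions when a request was not authenticated with MFA. It relies on the `aws:MultiFactorAuthPresent` condition key. The function name `deny_without_mfa_policy` and the list of exempted actions are illustrative assumptions and should be adapted to your environment.

```python
import json

# Actions a user still needs without MFA so they can enrol a device.
# This list is an illustrative assumption; adjust it to your needs.
MFA_SETUP_ACTIONS = [
    "iam:CreateVirtualMFADevice",
    "iam:EnableMFADevice",
    "iam:GetUser",
    "iam:ListMFADevices",
    "iam:ListVirtualMFADevices",
    "iam:ResyncMFADevice",
    "sts:GetSessionToken",
]


def deny_without_mfa_policy() -> dict:
    """Build a policy that denies everything except MFA setup when MFA is absent."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAllExceptMfaSetupWithoutMfa",
                "Effect": "Deny",
                "NotAction": MFA_SETUP_ACTIONS,
                "Resource": "*",
                # BoolIfExists also matches requests where the key is missing,
                # such as calls made with long-term access keys.
                "Condition": {
                    "BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}
                },
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(deny_without_mfa_policy(), indent=2))
```

Because the statement is an explicit deny, it takes precedence over any allow that the user receives from other policies.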

Minimize the number of users

The AWS root user has full access to all AWS services and resources – it should therefore never be used for everyday tasks. In their best practices guide, AWS encourages you to avoid using root unless absolutely necessary. AWS recommends that the root account only be used for updating billing information, upgrading or downgrading support, configuring marketplace or creating other users.

AWS uses the concept of users and roles to help manage access to AWS resources, but each is designed for a different purpose. Users are assigned long-term credentials (a console password or access keys) that provide explicit access to services. Service roles, on the other hand, are sets of permissions that an AWS service assumes in order to access other services.

This makes IAM Users the perfect choice for employee AWS accounts. You just assign the permissions that they’ll need to access services, and this can be done in the AWS console, or via the AWS CLI. Even better, you can assign a user to a previously created group with all of the correct permissions in place.

Roles are more important when architecting an AWS solution with multiple resources. In theory, you could hardcode user credentials into Lambda or EC2, and they would work, but this exposes you to multiple security threats. If an outsider obtained your credentials, they could use them from anywhere, even from outside your compute instance, which makes the breach harder to lock down afterwards. Should a disgruntled employee obtain further credentials, they could carry out a privilege escalation attack, executing actions under the guise of the original user. By defining a role with specific permissions and assigning that role to a service (such as Lambda or EC2), those services can access the resources they need without access keys.
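A service can only use a role if the role's trust policy names that service as a principal allowed to call `sts:AssumeRole`. The sketch below builds such a trust policy as a plain Python dictionary. The helper name `service_trust_policy` is hypothetical and is used here only for illustration.

```python
import json


def service_trust_policy(service_principal: str) -> dict:
    """Return a trust policy that lets one AWS service assume a role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # Lambda and EC2 use these service principals when assuming a role.
    for principal in ("lambda.amazonaws.com", "ec2.amazonaws.com"):
        print(json.dumps(service_trust_policy(principal), indent=2))
```

The service then receives short-lived credentials for the role at runtime, so nothing needs to be stored in the function code or on the instance.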

| Platform | Provisioning Automation | Security Management | Cost Management | Regulatory Compliance | Powered by Artificial Intelligence | Native Hybrid Cloud Support |
|---|---|---|---|---|---|---|
| AWS Native Tools | ✔ | ✔ | ✔ | | | |
| CoreStack | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |

Least privilege

When creating any policy, always apply the principle of least privilege. Only provide the minimum necessary access to any given resource, and only provide this to the users or services that require it. Defining your roles and permissions in code and then provisioning them via AWS CloudFormation can help dramatically. By using Infrastructure as Code, you can version control your entire architecture and subject every resource to peer-based code-review, before it reaches production.

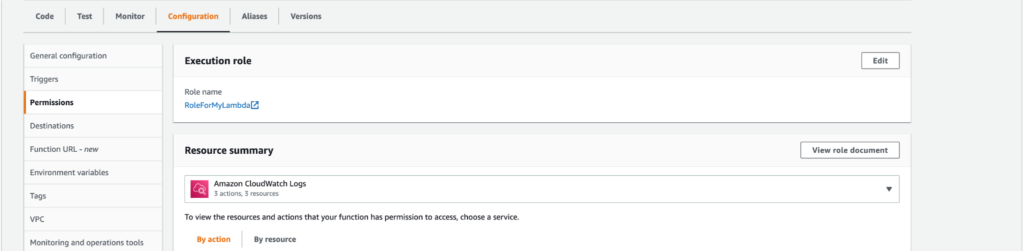

A Lambda function's role definition can be viewed within the AWS console by navigating to the function's Configuration tab and clicking the View role document button.

The role definition can be viewed directly through the AWS console

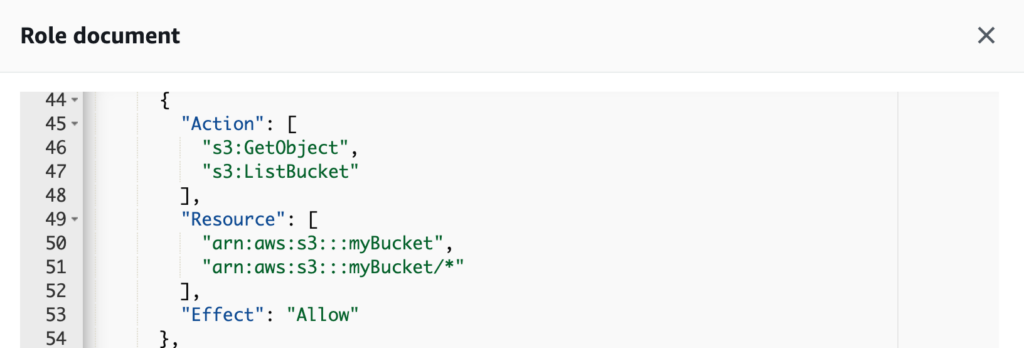

The role definition will then be displayed in a modal view, similar to the following:

A role document specifies what actions can or can not be performed against a set of resources

Consider the following role definition:

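    RoleForMyLambda:
      Type: "AWS::IAM::Role"
      Properties:
        # Trust policy (required): lets the Lambda service assume this role
        AssumeRolePolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Principal:
                Service: lambda.amazonaws.com
              Action: "sts:AssumeRole"
        Policies:
          - PolicyName: MyPolicy
            PolicyDocument:
              Version: "2012-10-17"
              Statement:
                - Effect: Allow
                  Action:
                    - "s3:*"
                  Resource:
                    - "arn:aws:s3:::*"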

We can see that it allows any Lambda function (or other service) that is assigned this role to perform any (*) action on any S3 bucket. This is unlikely to be necessary, and after a code review the role might evolve to the following:

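    RoleForMyLambda:
      Type: "AWS::IAM::Role"
      Properties:
        # Trust policy (required): lets the Lambda service assume this role
        AssumeRolePolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Principal:
                Service: lambda.amazonaws.com
              Action: "sts:AssumeRole"
        Policies:
          - PolicyName: MyPolicy
            PolicyDocument:
              Version: "2012-10-17"
              Statement:
                - Effect: Allow
                  Action:
                    - "s3:*"
                  Resource:
                    # The bucket itself and every object inside it
                    - "arn:aws:s3:::myBucket"
                    - "arn:aws:s3:::myBucket/*"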

This is a little better as we are limiting the scope of the permissions to a specific bucket and its contents. At this stage, we need to define what the Lambda function actually needs to do with this S3 bucket. If it never needs to write to the bucket, why should we risk giving it permissions to do so?

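    RoleForMyLambda:
      Type: "AWS::IAM::Role"
      Properties:
        # Trust policy (required): lets the Lambda service assume this role
        AssumeRolePolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Principal:
                Service: lambda.amazonaws.com
              Action: "sts:AssumeRole"
        Policies:
          - PolicyName: MyPolicy
            PolicyDocument:
              Version: "2012-10-17"
              Statement:
                - Effect: Allow
                  # Read-only: fetch objects and list the bucket contents
                  Action:
                    - "s3:GetObject"
                    - "s3:ListBucket"
                  Resource:
                    - "arn:aws:s3:::myBucket"
                    - "arn:aws:s3:::myBucket/*"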

This iteration looks much better. We have applied the principle of least privilege and have continued to reduce permissions until it can only perform the actions it needs against specific resources. In this case, the Lambda function assigned this role may only perform get and list actions on a specific bucket. If at any time the policy becomes too restrictive, version control will allow us to quickly roll it back.
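The review steps above can be partly automated. The following is a rough sketch of a check that flags `Allow` statements containing wildcard actions or wildcard resources, so that a policy like the first iteration is caught before it reaches production. The function name `find_broad_statements` and the matching rules are assumptions made for illustration, and a real review would need to consider more cases (for example `NotAction` or conditions).

```python
from typing import List


def _as_list(value) -> List[str]:
    """IAM allows a single string or a list; normalise to a list."""
    if value is None:
        return []
    return [value] if isinstance(value, str) else list(value)


def find_broad_statements(policy: dict) -> List[str]:
    """Return human-readable findings for overly broad Allow statements."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement is also valid
        statements = [statements]
    for index, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action")):
            # Flags "*" and service-wide wildcards such as "s3:*".
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {index}: broad action {action!r}")
        for resource in _as_list(stmt.get("Resource")):
            # Flags "*" and ARNs whose resource part is only "*".
            if resource == "*" or resource.endswith(":*"):
                findings.append(f"statement {index}: broad resource {resource!r}")
    return findings


if __name__ == "__main__":
    first_iteration = {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["s3:*"], "Resource": ["arn:aws:s3:::*"]}
        ],
    }
    for finding in find_broad_statements(first_iteration):
        print(finding)
```

Run against the final iteration of the role, which names two actions and one bucket, the check reports nothing.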

In some cases, you might not know the exact name of the resource when you write the policy. For example, you might want to assign what is essentially the same policy to several AWS accounts or users, each scoped to its own resources. Creating multiple policies where the only difference is the resource will work, but you can achieve the same outcome with policy variables. This feature lets you specify placeholders that are replaced with values from the request context when the policy is evaluated. Never solve this problem by falling back to “*” wildcards.
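As a small illustration of policy variables, the sketch below builds a single policy that gives every IAM user read access to their own prefix in a bucket by using the `${aws:username}` variable. The `home/` prefix layout and both function names are assumptions made for this example. `preview_resource` only mimics the substitution locally so that the effect is visible; IAM performs the real substitution when it evaluates a request.

```python
import json


def per_user_prefix_policy(bucket: str) -> dict:
    """Let each IAM user read only objects under a prefix named after them."""
    return {
        # Policy variables require the 2012-10-17 policy version.
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # IAM replaces ${aws:username} with the caller's user name.
                "Resource": [f"arn:aws:s3:::{bucket}/home/${{aws:username}}/*"],
            }
        ],
    }


def preview_resource(resource: str, username: str) -> str:
    """Local illustration only: mimic the substitution IAM performs."""
    return resource.replace("${aws:username}", username)


if __name__ == "__main__":
    policy = per_user_prefix_policy("myBucket")
    print(json.dumps(policy, indent=2))
    print(preview_resource(policy["Statement"][0]["Resource"][0], "alice"))
```

One policy attached to a group then covers every member, and no statement needs a bucket-wide wildcard.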

Review access and access controls

Users, roles and policies should be reviewed on a regular basis. Accounts that are no longer used or needed should be de-provisioned as soon as possible. A formalized JML (joiner/mover/leaver) process for handling the lifecycle of an employee is a necessity for any large organization. For tracking access, AWS provides multiple services that help protect your organization and identify gaps in your security:

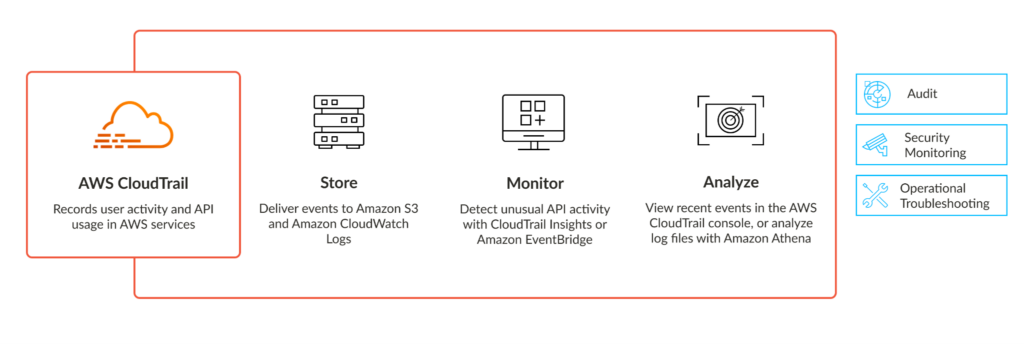

AWS CloudTrail

CloudTrail records user activity and API calls across AWS services. Events can be delivered to Amazon S3 and CloudWatch Logs, which allows for advanced monitoring and analysis with tools such as CloudTrail Insights and Amazon Athena. You can even use ML models that are trained to spot unusual activity. AWS has published a number of CloudTrail best practices that you can follow, but one of the most important things to consider is where log files are exported. Ideally, they should be sent to a central location which can be locked down to ensure integrity.

AWS CloudTrail monitors and records account activity across your AWS infrastructure
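Once log files have been exported, they can be inspected with very little code. The sketch below assumes a decompressed CloudTrail log file, which is a JSON document with a top-level `Records` array, and lists the events made with one access key. The function name `events_for_access_key` is hypothetical, and the sample record in the usage section is made-up illustrative data rather than real log output.

```python
import json
from typing import Dict, List


def events_for_access_key(log_text: str, access_key_id: str) -> List[Dict]:
    """Return summary rows for every record made with the given access key."""
    records = json.loads(log_text).get("Records", [])
    matches = []
    for record in records:
        identity = record.get("userIdentity", {})
        if identity.get("accessKeyId") == access_key_id:
            matches.append(
                {
                    "time": record.get("eventTime"),
                    "source": record.get("eventSource"),
                    "name": record.get("eventName"),
                    "ip": record.get("sourceIPAddress"),
                }
            )
    # ISO 8601 UTC timestamps sort correctly as plain strings.
    return sorted(matches, key=lambda row: row["time"] or "")


if __name__ == "__main__":
    # Made-up sample data in the shape of a CloudTrail log file.
    sample = json.dumps(
        {
            "Records": [
                {
                    "eventTime": "2024-01-01T10:00:00Z",
                    "eventSource": "s3.amazonaws.com",
                    "eventName": "GetObject",
                    "sourceIPAddress": "203.0.113.10",
                    "userIdentity": {"accessKeyId": "AKIAIOSFODNN7EXAMPLE"},
                }
            ]
        }
    )
    for row in events_for_access_key(sample, "AKIAIOSFODNN7EXAMPLE"):
        print(row)
```

For larger volumes, a query service such as Athena is likely to be a better fit than reading files one at a time.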

Access Analyzer

IAM Access Analyzer uses AWS CloudTrail logs to track the actions and services that have been used by users and roles. It can also be used to generate an IAM policy based on access activity alone. For example, if a user only reads from, but never writes to, an S3 bucket, Access Analyzer can generate a policy which only grants read access.
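The idea behind activity-based policy generation can be shown with a toy example. The sketch below is not how Access Analyzer is implemented; it simply groups observed `(action, resource)` pairs into `Allow` statements so that only what was actually used is granted. The function name `policy_from_activity` and the input format are assumptions made for this illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def policy_from_activity(observed: Iterable[Tuple[str, str]]) -> Dict:
    """Build an Allow policy from (action, resource ARN) pairs seen in use."""
    actions_by_resource = defaultdict(set)
    for action, resource in observed:
        actions_by_resource[resource].add(action)
    statements = [
        {"Effect": "Allow", "Action": sorted(actions), "Resource": [resource]}
        for resource, actions in sorted(actions_by_resource.items())
    ]
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    # Made-up activity: the principal only ever read from the bucket.
    activity = [
        ("s3:GetObject", "arn:aws:s3:::myBucket/*"),
        ("s3:ListBucket", "arn:aws:s3:::myBucket"),
        ("s3:GetObject", "arn:aws:s3:::myBucket/*"),
    ]
    print(policy_from_activity(activity))
```

Since no write action appears in the observed activity, none appears in the resulting policy. A generated policy should still be reviewed by a person before it replaces a hand-written one.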

Embedding keys

Your security controls may be in vain if your AWS access keys are not managed with care. The Hacker News recently published a story showing that over 40 apps with more than 100 million installs were leaking AWS keys. The possibilities for deliberate misuse are endless, so it's important to stay vigilant and always:

- peer review application and infrastructure code. N*2 eyes are better than 2. An essential part of code review is looking for logic that could be exploited for misuse. For example, if hardcoded credentials are found, this should be flagged and prevented from entering production

- use roles and inline permissions to grant services access to other services and resources. This eliminates any need for access keys in your application code. Some services also support resource-based policies, allowing you to specify who has access to a given resource and the actions they may perform.

- be prepared for the worst. Have a plan in place that records what should be done if access keys are leaked. You should immediately invalidate exposed credentials and remove them from your version control system. You should then review what other systems these credentials might have exposed. For example, attackers may have used SSH to jump into another EC2 instance with more hardcoded credentials. Perhaps they logged into the AWS console to browse Secrets Manager or a DynamoDB table. CloudTrail can help you detect recent activity carried out by a given set of credentials.
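The code-review point above can be backed by an automated check that runs before code is merged. The following is a minimal sketch that scans text for strings shaped like AWS access key IDs. The pattern is a heuristic based on the usual 20-character format and known prefixes, so it can produce both false positives and misses, and the function name `find_access_key_ids` is hypothetical. The key in the usage section is a well-known documentation placeholder, not a real credential.

```python
import re
from typing import List, Tuple

# Access key IDs are 20 characters; long-term keys start with "AKIA" and
# temporary ones with "ASIA". The pattern is a heuristic, not a guarantee.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def find_access_key_ids(text: str) -> List[Tuple[int, str]]:
    """Return (line number, match) pairs for strings that look like key IDs."""
    hits = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for match in ACCESS_KEY_ID.finditer(line):
            hits.append((line_number, match.group(0)))
    return hits


if __name__ == "__main__":
    sample = 'region = "eu-west-1"\naws_access_key_id = "AKIAIOSFODNN7EXAMPLE"\n'
    for line_number, key_id in find_access_key_ids(sample):
        print(f"line {line_number}: possible access key ID {key_id}")
```

A check like this only finds key IDs that are still in the scanned text. Any key that has ever been committed should be treated as exposed and rotated, as described in the last bullet.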

Conclusion

Managing access control across a complex AWS architecture can often be a daunting task. But with a little experience and by following these best practices, the process can be dramatically simplified. AWS provides many predefined policies which can be used for day-to-day tasks. The console and API also provide a user-friendly way of defining custom controls and processes. By encapsulating policies into code via CloudFormation, you can retain control and gain insight into how and where your permissions have been allocated. It also allows you to uniformly refine your policies to achieve the recommended principle of least privilege. By utilizing the many pre-existing AWS monitoring services, you can easily keep track of overall security. If in any doubt, you can always fall back on the security pillar of the AWS Well-Architected Framework as your guide. And don’t forget to always follow the official IAM security best practices.