Cloud Cost Optimization Strategies & Best Practices

Cloud environments are often built as test projects that then evolve into production environments. Cost is often a consideration only after workloads scale out and organizations find themselves at the receiving end of unexpectedly large cloud bills. It feels overwhelming to start “turning things off,” and no engineer wants to be responsible for an outage or business disruption!

That’s where cloud cost optimization comes in. Deprovisioning environments when not in use and negotiating with vendors to benefit from committed spending are ways to reduce those cloud bills. More complex methods include optimizing environments with right-sized, elastic resources and refactoring applications to run in transient environments.

This article explores four key methods used to optimize cloud costs across organizations. We primarily focus on infrastructure-as-a-service (IaaS) resources, as platform-as-a-service (PaaS) optimization is service-specific, and software-as-a-service (SaaS) pricing is mainly a function of enterprise contract negotiations. You can significantly reduce expenditure by incorporating our cloud cost optimization methods into your organization’s existing strategy. Save on time-consuming reassessments by building new workloads with cost in mind from the beginning.

Summary of cloud cost optimization best practices

| Review and act upon production environment metrics. | Production environments often have varying workload demand through days, weeks, or seasons. Save money through strategies like:

|

| Turn off dev, test, and build environments when not in use. | Cloud environments have gotten faster at provisioning resources on-demand, and turning off resources may have no end-user impact. For a quick win, target holidays, weekends, and evenings first. |

| Refactor stateless workloads to function in temporary environments. | You can architect workloads to handle an unexpected shutdown and resume operations on a new instance. They can take advantage of “spot” or “preemptible” instance types that are massively discounted. |

| Buy contractually committed discounts from vendors. | If workloads are guaranteed or anticipated to run for several years, you can achieve cost savings of over 25% by committing to the usage upfront. You can also apply discounts across projects, compute families, and environments for extra flexibility. |

#1 Production environment metrics

Cloud cost optimization always begins with deeply understanding your workloads. Metrics operate like a dashboard in a car and are at the heart of cloud cost optimization. We review metrics first and reference them throughout the rest of the article.

Map traffic to infrastructure usage

The first goal is to match user traffic back to infrastructure usage and saturation. For example, if you know that:

- Every 10,000 users correlate to 20% of a VM’s CPU usage, and

- Your application’s quality begins to suffer when your CPU hits 90% saturation,

then you can estimate how much hardware is required for various levels of predictable traffic and when it is time to scale up. However, if the metrics you are using to autoscale conflict, your infrastructure ends up in a circular flow reacting to its own scaling and causing issues instead of basing decisions on real user traffic.

For externally facing applications, start with your existing analytics tooling, such as Adobe Omniture or Google Analytics, to document user traffic patterns. If your applications are internal, use department data to understand what times of day users are expected to be able to access environments. Focus on trends that repeat over time, correlate them with your platform metrics, and start by writing scripts that automatically shut down or start up applications as needed. You can do this in Google Cloud with a schedule, in AWS with the Instance Scheduler, and in Azure with Functions.

Incorporate auto-scaling

Maintaining at least one replica of each service ensures users can access them, but the real benefit of the cloud comes from incorporating auto-scaling and elastic capabilities. For example, if you can run one instance of your application overnight but quickly scale up to meet demand, your cloud costs scale with your usage instead of being “always on.” Moving away from dedicated schedules to actual elasticity requires a full understanding of the following:

- Your app's startup and shutdown requirements.

- Minimum availability and stateful requirements.

When introducing elastic scalability into your environments, you should also ensure bin packing—verifying that the workload optimizes its underlying infrastructure. Over-provisioned infrastructure wastes energy and money, and if your average VM utilization is consistently under 50%, then you’re likely overprovisioning your hardware. Right size your workloads by correctly matching them to resources for elastic scalability.

Know the platforms you are working on

Effective cloud cost optimization often relies on system administrators and engineers being fully trained in their optimizing platforms. For example:

- Kubernetes users should fully understand the difference between Horizontal Pod Autoscaler, Vertical Pod Autoscaling, and Cluster Autoscaler in each cloud provider.

- Oracle JVM users should know that the platform’s longer startup time requires them to spin up new instances when older ones reach 70% capacity.

- Fast-booting Python application administrators can wait for earlier instances to reach 90% capacity before scaling up.

After performing this analysis, setting schedules, and enabling autoscaling, cost engineers must ensure that SLAs are still being met and users have a positive experience. Creating a degraded runtime environment in the name of cost savings creates reputational harm. It instills doubts in SRE and DevOps teams, who become hesitant to focus on cloud cost optimization projects in the future.

#2 On-demand dev, test, and build environments

Many cloud environments go unused, and vendors provide tooling to see underutilized projects and resources. Once you understand your metrics and how your organization uses its infrastructure from the section above, you can focus on low-hanging fruit first. Turn off non-production environments when developers are offline, outside working hours, or on vacation.

Developer challenges

As developers are not responsible for costs, many build their environments once, leaving them running indefinitely. After all, it takes significant time and effort to set up dev environments, and no one wants to shut it all down and lose productivity gains. Developers are also not incentivized to focus on controlling costs in their sandbox environments.

Cloud cost optimization solutions for developers

You must create tooling for developers to provision their environments easily when needed so they don’t feel the need to keep them constantly running. Platform teams with DevOps training can provide a CLI-based experience to downstream engineers to meet the technical requirements of day-to-day work and the organization's financial needs.

Also, consider documenting and standardizing your deployment frequency based on existing CI/CD metrics. Use the data to ensure your build environment availability matches your release frequency. For instance, if you only release code weekly, the build environment can likely be shut down for six days. Most modern continuous deployment tools are also configurable to provision on-demand resources.

#3 Stateless workloads and ephemeral environments

Most mature organizations operating in the cloud are familiar with elasticity, right-sizing, and de-provisioning resources to focus on an on-demand strategy. A less-known method to introduce significant cost savings is migrating to cloud infrastructure that shuts down randomly.

For context, spot and preemptible instances are built out of the excess cloud capacity in vendor data centers. Most vendors provide highly discounted VM options to help sell the “unused space” between other customer VMs, and they offer large incentives if you can design your applications to fill those gaps. Some discounts run as deep as 90% to promote the usage of these virtual machines.

Move from monolith to microservices.

Leveraging spot and preemptible virtual machine instances can be problematic for most applications – especially monoliths. Instead, you can refactor your applications to use a microservices pattern that supports termination in an unexpected shutdown situation. Most vendors also attempt to provide a grace period for the shutdown and allow an app to terminate altogether. While the workload can be evicted anytime, your app can still try a proper shutdown. However, these shutdown windows are not guaranteed and may only last between 15 and 30 seconds.

After designing SLAs and architecting for 99.99% availability, many engineers can’t fathom moving their workloads to VMs that intentionally introduce chaos into their environments. However, when done correctly, it can increase the resilience of the applications, help the teams running those applications understand their weak spots, and save the organization money.

#4 Purchasing contractual commitments

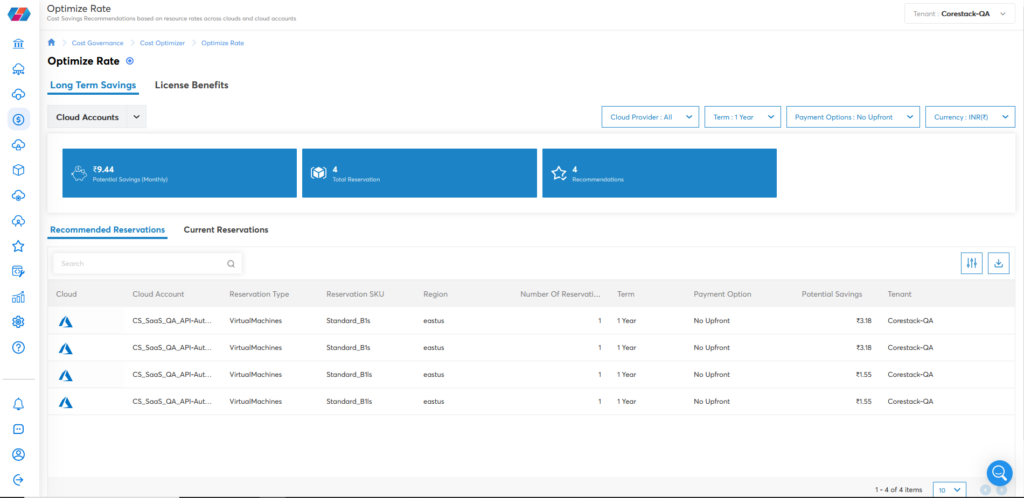

One of the easiest ways to save money in the cloud is by committing to resource usage. Cloud service providers offer discounts because they use the information from committed contracts to plan data center capacity and forecast long-term revenue for their investors. For example, Azure and AWS offer reserved instances, and Google Cloud provides committed use discounts to incentivize organizations to make up-front one-year or three-year commitments to their VM or database instances.

Most cloud vendors also offer flexibility to apply these discounts across different projects and VM families to ensure you can still use them if your applications or underlying architecture changes. They care most about receiving the revenue, not where you apply the discount.

|

Platform

|

Provisioning Automation |

Security Management |

Cost Management |

Regulatory Compliance |

Powered by Artificial Intelligence |

Native Hybrid Cloud Support

|

|---|---|---|---|---|---|---|

|

Cloud Native Tools |

✔

|

✔

|

✔

|

|||

|

CoreStack

|

✔

|

✔

|

✔

|

✔

|

✔

|

✔

|

Conclusion

Cloud cost optimization typically becomes a concern only after cloud resources have been deployed. Building an understanding of your infrastructure is an excellent starting point. Once you understand usage, you can scale, right-size, destroy, and refactor your workloads.

However, cloud environments become the most cost-effective when you implement best practices from the start. There are opportunities for intentional cloud architecture to optimize resources for long-term savings. You can use cloud governance platforms like CoreStack to optimize your cloud spending while forecasting for the future.